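This article develops a computer vision system that accurately detects and segments objects in video. I apply three state-of-the-art (SoA) methods, YOLO, SSD and Faster R-CNN, and evaluate their performance. I then analyze the results visually to highlight each method's strengths and weaknesses, and use the evaluation and analysis to identify the best-performing method. A link to a video showing the best method in action is provided at the end.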

1. YOLO (You Only Look Once)

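Deep learning models such as YOLOv8 have become essential in many industries, including robotics, autonomous driving and video surveillance. These models detect objects in real time and feed into safety-relevant decisions. YOLOv8 (You Only Look Once) combines computer vision techniques with machine learning algorithms to identify objects in images and video with high speed and accuracy. This enables efficient and accurate object detection, which is crucial in many applications (Keylabs, 2023).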

Implementation details

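I created a run_model function for object detection and segmentation. It takes three arguments: the model, the input video and the output video path. It reads the input video frame by frame and draws the model's results onto each frame. The annotated frames are written to the output video file until all frames have been processed or the user presses "q" to stop.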

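For object detection I use the YOLOv8 nano model (yolov8n.pt), which produces a video with bounding boxes around the detected objects. For object segmentation I use the same model with segmentation-specific weights (yolov8n-seg.pt), which produces a video with the segmented objects. Because run_model calls model.track with the ByteTrack tracker, each object also keeps a track ID across frames.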
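The control flow of run_model can be separated from OpenCV and Ultralytics so that its stop conditions are easy to test. The sketch below uses a hypothetical helper, process_stream, which is not part of the project. The frame source, annotation step, writer and key check are passed in as plain callables that stand in for cap.read(), model.track(...)[0].plot(), out.write() and cv2.waitKey().

```python
from typing import Callable, Iterable, TypeVar

Frame = TypeVar("Frame")


def process_stream(frames: Iterable[Frame],
                   annotate: Callable[[Frame], Frame],
                   write: Callable[[Frame], None],
                   stop_requested: Callable[[], bool]) -> int:
    """Annotate and write frames until the source is exhausted or a stop is requested.

    Returns the number of frames written. Hypothetical helper for illustration only.
    """
    written = 0
    for frame in frames:              # an exhausted source plays the role of ret == False
        annotated = annotate(frame)   # e.g. model.track(...)[0].plot()
        write(annotated)              # e.g. out.write(annotated)
        written += 1
        if stop_requested():          # e.g. cv2.waitKey(1) & 0xFF == ord("q")
            break
    return written
```

As in run_model, the current frame is written before the key check, so pressing "q" still saves the frame on screen.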

```python
import cv2
from ultralytics import YOLO

# VIDEO, OUTPUT_VIDEO_YOLO_DET and OUTPUT_VIDEO_YOLO_SEG are file paths defined elsewhere in the notebook


def run_model(model, video, output_video):
    """Run YOLO tracking on every frame of `video` and save the annotated frames."""
    cap = cv2.VideoCapture(video)
    if not cap.isOpened():
        raise IOError(f"Cannot open video: {video}")

    # Create a VideoWriter that matches the input frame size and frame rate
    fourcc = cv2.VideoWriter_fourcc(*'mp4v')
    frame_width = int(cap.get(cv2.CAP_PROP_FRAME_WIDTH))
    frame_height = int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT))
    fps = cap.get(cv2.CAP_PROP_FPS) or 20.0  # fall back to 20 FPS if unknown
    out = cv2.VideoWriter(output_video, fourcc, fps, (frame_width, frame_height))

    while True:
        # Capture frame-by-frame
        ret, frame = cap.read()
        if not ret:
            print("No frame...")
            break

        # Detect (or segment) and track objects; persist=True keeps track IDs between calls
        results = model.track(source=frame, persist=True, tracker='bytetrack.yaml')
        frame = results[0].plot()  # draw boxes (and masks), class labels and track IDs

        # Write the annotated frame to the output video file and display it
        out.write(frame)
        cv2.imshow("ObjectDetection", frame)

        # Terminate the run when "q" is pressed
        if cv2.waitKey(1) & 0xFF == ord("q"):
            break

    # Release the capture and finalize the output file
    cap.release()
    out.release()
    cv2.destroyAllWindows()


# Object detection
run_model(model=YOLO('yolov8n.pt'), video=VIDEO, output_video=OUTPUT_VIDEO_YOLO_DET)

# Object segmentation
run_model(model=YOLO('yolov8n-seg.pt'), video=VIDEO, output_video=OUTPUT_VIDEO_YOLO_SEG)
```

2. Faster R-CNN (Region-based Convolutional Neural Network)

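Faster R-CNN is a state-of-the-art object detection model with two main components: a deep fully convolutional region proposal network and a Fast R-CNN object detector. Its Region Proposal Network (RPN) shares full-image convolutional features with the detection network (Ren et al., 2015). The RPN is a fully convolutional network that generates high-quality region proposals, which Fast R-CNN then uses for detection. The two are merged into a single network in which the RPN tells the detector where to look (Ren et al., 2015).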
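The RPN's "where to look" can be made concrete. At every position of the shared feature map, the RPN scores a fixed set of reference boxes (anchors) and regresses offsets from them. The sketch below only generates those anchors. It uses the three scales (128, 256 and 512 pixels) and three aspect ratios (1:2, 1:1 and 2:1) described by Ren et al. (2015), with an assumed stride of 16 pixels. torchvision's ResNet-50-FPN variant spreads anchor sizes across pyramid levels instead, so its exact anchors differ.

```python
from itertools import product
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in input-image pixels


def rpn_anchors(feat_h: int, feat_w: int, stride: int,
                scales=(128, 256, 512), ratios=(0.5, 1.0, 2.0)) -> List[Box]:
    """Return len(scales) * len(ratios) anchors centred on every feature-map cell."""
    anchors = []
    for y, x in product(range(feat_h), range(feat_w)):
        # Centre of this feature-map cell, mapped back to input-image pixels
        cx, cy = (x + 0.5) * stride, (y + 0.5) * stride
        for scale, ratio in product(scales, ratios):
            # ratio = height / width; the area stays scale**2 for every ratio
            w = scale / ratio ** 0.5
            h = scale * ratio ** 0.5
            anchors.append((cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2))
    return anchors
```

A 2 x 3 feature map therefore yields 2 * 3 * 9 = 54 anchors. The RPN predicts an objectness score and four box offsets for each anchor.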

(1) Object detection with Faster R-CNN

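To implement object detection I created two functions: get_model and faster_rcnn_object_detection. get_model loads a Faster R-CNN model from the torchvision library, pre-trained on the COCO dataset with a ResNet-50-FPN backbone, and puts it in evaluation mode. faster_rcnn_object_detection then runs detection on a single video frame. It converts the frame to a tensor and passes it to the model, which returns predictions containing the bounding boxes, labels and scores of the detected objects. For every detection with a confidence score above 0.9, the function draws the bounding box and a label showing the class name and score.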
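The filtering and labeling step described above can be isolated from the OpenCV drawing calls. In this sketch, confident_labels is a hypothetical helper. class_names is assumed to be indexed directly by the model's label id, as with torchvision's `weights.meta["categories"]` list, whose entry 0 is "__background__".

```python
from typing import List, Sequence, Tuple

IntBox = Tuple[int, int, int, int]


def confident_labels(boxes: Sequence[Tuple[float, float, float, float]],
                     labels: Sequence[int], scores: Sequence[float],
                     class_names: Sequence[str], threshold: float = 0.9
                     ) -> List[Tuple[IntBox, str]]:
    """Keep detections with score > threshold and build their "class: score" captions."""
    kept = []
    for box, label_id, score in zip(boxes, labels, scores):
        if score > threshold:
            # Truncate to integer pixel coordinates, as the drawing functions need
            xmin, ymin, xmax, ymax = (int(v) for v in box)
            kept.append(((xmin, ymin, xmax, ymax), f"{class_names[label_id]}: {score:.2f}"))
    return kept
```

Each returned pair maps onto one cv2.rectangle call (the box) and one cv2.putText call (the caption).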

```python
import cv2
import torch
import torchvision.transforms as T
from torchvision.models.detection import fasterrcnn_resnet50_fpn, FasterRCNN_ResNet50_FPN_Weights

# VIDEO and OUTPUT_VIDEO_FASTER_RCNN_DET are file paths defined elsewhere in the notebook
FONT = cv2.FONT_HERSHEY_SIMPLEX
WEIGHTS = FasterRCNN_ResNet50_FPN_Weights.DEFAULT
COCO_NAMES = WEIGHTS.meta["categories"]  # index 0 is "__background__", so labels index it directly


def get_model():
    # Load a Faster R-CNN model (ResNet-50-FPN backbone) pre-trained on COCO
    model = fasterrcnn_resnet50_fpn(weights=WEIGHTS)
    model.eval()
    return model


def faster_rcnn_object_detection(model, frame, threshold=0.9):
    # OpenCV frames are BGR; the model expects an RGB tensor in [0, 1] with a batch dimension
    rgb = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
    frame_tensor = T.ToTensor()(rgb).unsqueeze(0)

    with torch.no_grad():
        prediction = model(frame_tensor)[0]

    bboxes = prediction["boxes"].numpy()
    labels = prediction["labels"].numpy()
    scores = prediction["scores"].numpy()

    # Draw boxes and labels only for confident predictions
    for box, label_id, score in zip(bboxes, labels, scores):
        if score <= threshold:
            continue
        xmin, ymin, xmax, ymax = map(int, box)
        cv2.rectangle(frame, (xmin, ymin), (xmax, ymax), (0, 255, 0), 3)
        # Put label
        label = f"{COCO_NAMES[int(label_id)]}: {score:.2f}"
        cv2.putText(frame, label, (xmin, max(ymin - 10, 10)), FONT, 0.5, (255, 0, 0), 2, cv2.LINE_AA)
    return frame


# Set up the model
model = get_model()

# Video capture and writer setup
cap = cv2.VideoCapture(VIDEO)
fourcc = cv2.VideoWriter_fourcc(*'mp4v')
frame_width = int(cap.get(cv2.CAP_PROP_FRAME_WIDTH))
frame_height = int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT))
fps = cap.get(cv2.CAP_PROP_FPS) or 20.0  # fall back to 20 FPS if unknown
out = cv2.VideoWriter(OUTPUT_VIDEO_FASTER_RCNN_DET, fourcc, fps, (frame_width, frame_height))

while cap.isOpened():
    ret, frame = cap.read()
    if not ret:
        print("No frame...")
        break

    # Process the frame, write it to the output and display it
    processed_frame = faster_rcnn_object_detection(model, frame)
    out.write(processed_frame)
    cv2.imshow('Frame', processed_frame)
    if cv2.waitKey(1) & 0xFF == ord('q'):
        break

# Release everything once finished
cap.release()
out.release()
cv2.destroyAllWindows()
```

(2) Object segmentation with Faster R-CNN (Mask R-CNN)

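To implement object segmentation I use Mask R-CNN, which extends Faster R-CNN with a branch that predicts a pixel mask for each detected object. I load the Mask R-CNN model with a ResNet-50-FPN backbone from the torchvision library, pre-trained on the COCO dataset, and put it in evaluation mode. The faster_rcnn_object_segmentation function then converts each video frame to a tensor and runs the model. It filters detections by a confidence threshold. For each remaining detection, it overlays the segmentation mask on the frame, draws the bounding box and adds a text label.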
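The mask overlay described above comes down to two steps per detection. First, binarize Mask R-CNN's per-pixel mask probabilities. Second, blend a color into the pixels that pass. The pure-Python sketch below uses a hypothetical blend_mask helper that works on nested lists instead of NumPy arrays. It applies the same 0.6 mask threshold and 50/50 blend as the project code.

```python
from typing import List, Sequence, Tuple

Pixel = Tuple[int, int, int]


def blend_mask(image: List[List[Pixel]], soft_mask: Sequence[Sequence[float]],
               color: Pixel, mask_threshold: float = 0.6, alpha: float = 0.5
               ) -> List[List[Pixel]]:
    """Return a copy of image where pixels with mask probability > mask_threshold
    are mixed with color: out = (1 - alpha) * pixel + alpha * color (truncated to int)."""
    out = []
    for row, mask_row in zip(image, soft_mask):
        new_row = []
        for pixel, prob in zip(row, mask_row):
            if prob > mask_threshold:
                pixel = tuple(int((1 - alpha) * p + alpha * c) for p, c in zip(pixel, color))
            new_row.append(pixel)
        out.append(new_row)
    return out
```

Pixels below the mask threshold are left untouched, so the object stays visible through a semi-transparent tint.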

```python
import cv2
import numpy as np
import torch
import torchvision.transforms as T
from torchvision.models.detection import maskrcnn_resnet50_fpn, MaskRCNN_ResNet50_FPN_Weights

# VIDEO and OUTPUT_VIDEO_FASTER_RCNN_SEG are file paths defined elsewhere in the notebook
FONT = cv2.FONT_HERSHEY_SIMPLEX
WEIGHTS = MaskRCNN_ResNet50_FPN_Weights.DEFAULT
COCO_NAMES = WEIGHTS.meta["categories"]  # index 0 is "__background__", so labels index it directly

# Load the Mask R-CNN model (ResNet-50-FPN backbone) pre-trained on COCO
model = maskrcnn_resnet50_fpn(weights=WEIGHTS)
model.eval()


# Overlay masks and draw rectangles and labels on the frame
def faster_rcnn_object_segmentation(frame, threshold=0.9, mask_threshold=0.6):
    # OpenCV frames are BGR; the model expects an RGB tensor in [0, 1] with a batch dimension
    rgb = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
    frame_tensor = T.ToTensor()(rgb).unsqueeze(0)

    with torch.no_grad():
        prediction = model(frame_tensor)[0]

    labels = prediction['labels'].cpu().numpy()
    masks = prediction['masks'].cpu().numpy()  # (N, 1, H, W) per-pixel probabilities
    scores = prediction['scores'].cpu().numpy()
    boxes = prediction['boxes'].cpu().numpy()

    overlay = frame.copy()
    for mask, box, label_id, score in zip(masks, boxes, labels, scores):
        if score <= threshold:
            continue
        # Binarize the soft mask and blend a random color 50/50 into the masked pixels
        binary = mask[0] > mask_threshold
        color = np.random.randint(0, 256, 3)
        overlay[binary] = (frame[binary] * 0.5 + color * 0.5).astype(np.uint8)

        xmin, ymin, xmax, ymax = map(int, box)
        # Draw rectangle
        cv2.rectangle(overlay, (xmin, ymin), (xmax, ymax), (0, 255, 0), 2)
        # Put label
        label = f"{COCO_NAMES[int(label_id)]}: {score:.2f}"
        cv2.putText(overlay, label, (xmin, max(ymin - 10, 10)), FONT, 0.5, (255, 0, 0), 2, cv2.LINE_AA)
    return overlay


# Capture video and set up the writer
cap = cv2.VideoCapture(VIDEO)
fourcc = cv2.VideoWriter_fourcc(*'mp4v')
frame_width = int(cap.get(cv2.CAP_PROP_FRAME_WIDTH))
frame_height = int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT))
fps = cap.get(cv2.CAP_PROP_FPS) or 20.0  # fall back to 20 FPS if unknown
out = cv2.VideoWriter(OUTPUT_VIDEO_FASTER_RCNN_SEG, fourcc, fps, (frame_width, frame_height))

while cap.isOpened():
    ret, frame = cap.read()
    if not ret:
        print("No frame...")
        break

    # Overlay masks, write the frame to the output and display it
    processed_frame = faster_rcnn_object_segmentation(frame)
    out.write(processed_frame)
    cv2.imshow('Frame', processed_frame)
    if cv2.waitKey(1) & 0xFF == ord('q'):
        break

# Release everything once finished
cap.release()
out.release()
cv2.destroyAllWindows()
```

3. SSD (Single Shot MultiBox Detector)

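SSD (Single Shot MultiBox Detector) detects objects in images with a single deep neural network. It discretizes the output space of bounding boxes into a set of default boxes with different aspect ratios and scales at each feature-map location. At prediction time, the network scores the presence of each object category in each default box and adjusts the box to better match the object's shape. SSD combines predictions from multiple feature maps of different resolutions to handle objects of various sizes. Because it eliminates the proposal generation and resampling stages, it is simple to train and easy to integrate into systems that need a detection component (Liu et al., 2016).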
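The default boxes can be made concrete with the scale rule from Liu et al. (2016). For m feature maps, layer k uses scale s_k = s_min + (s_max - s_min)(k - 1)/(m - 1), with s_min = 0.2 and s_max = 0.9. Each box has width s_k * sqrt(a) and height s_k / sqrt(a) for aspect ratios a in {1, 2, 3, 1/2, 1/3}. An extra square box of scale sqrt(s_k * s_(k+1)) is added per layer. The sketch below computes these relative shapes. Using 1.0 as the scale after the last layer is an assumption. torchvision's ssd300_vgg16 configures its own per-layer scales and ratios, so its boxes differ.

```python
import math
from typing import List, Tuple


def ssd_default_box_shapes(m: int = 6, s_min: float = 0.2, s_max: float = 0.9,
                           ratios=(1.0, 2.0, 3.0, 0.5, 1 / 3)) -> List[List[Tuple[float, float]]]:
    """Relative (width, height) of the default boxes for each of m feature maps."""
    # Linearly spaced scales; the trailing 1.0 (scale "after" the last layer) is an assumption
    scales = [s_min + (s_max - s_min) * k / (m - 1) for k in range(m)] + [1.0]
    shapes = []
    for k in range(m):
        s_k = scales[k]
        boxes = [(s_k * math.sqrt(a), s_k / math.sqrt(a)) for a in ratios]
        # Extra square box for aspect ratio 1 with scale sqrt(s_k * s_{k+1})
        s_extra = math.sqrt(s_k * scales[k + 1])
        boxes.append((s_extra, s_extra))
        shapes.append(boxes)
    return shapes
```

Every layer therefore contributes six box shapes per location. Multiplying the relative sizes by the input size (300 for SSD300) gives pixel dimensions.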

(1) Object detection with SSD

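I created an ssd_object_detection function that uses the pre-trained SSD (Single Shot MultiBox Detector) model to process each video frame, run detection and draw bounding boxes around the detected objects.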

```python
import cv2
import torch
import torchvision.transforms as T
from torchvision.models.detection import ssd300_vgg16, SSD300_VGG16_Weights

# VIDEO and OUTPUT_VIDEO_SSD_DET are file paths defined elsewhere in the notebook
FONT = cv2.FONT_HERSHEY_SIMPLEX
WEIGHTS = SSD300_VGG16_Weights.DEFAULT
COCO_NAMES = WEIGHTS.meta["categories"]  # index 0 is "__background__", so labels index it directly

# Load the SSD300 (VGG16 backbone) model pre-trained on COCO
model = ssd300_vgg16(weights=WEIGHTS)
model.eval()


def ssd_object_detection(frame, threshold=0.5):
    # OpenCV frames are BGR; the model expects an RGB tensor in [0, 1] with a batch dimension
    rgb = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
    frame_tensor = T.ToTensor()(rgb).unsqueeze(0)

    with torch.no_grad():
        prediction = model(frame_tensor)[0]

    labels = prediction['labels'].cpu().numpy()
    scores = prediction['scores'].cpu().numpy()
    boxes = prediction['boxes'].cpu().numpy()

    for box, label_id, score in zip(boxes, labels, scores):
        if score <= threshold:
            continue
        xmin, ymin, xmax, ymax = map(int, box)
        # Draw rectangle
        cv2.rectangle(frame, (xmin, ymin), (xmax, ymax), (0, 255, 0), 2)
        # Put label
        label = f"{COCO_NAMES[int(label_id)]}: {score:.2f}"
        cv2.putText(frame, label, (xmin, max(ymin - 10, 10)), FONT, 0.5, (255, 0, 0), 2, cv2.LINE_AA)
    return frame


# Capture video and set up the writer
cap = cv2.VideoCapture(VIDEO)
fourcc = cv2.VideoWriter_fourcc(*'mp4v')
frame_width = int(cap.get(cv2.CAP_PROP_FRAME_WIDTH))
frame_height = int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT))
fps = cap.get(cv2.CAP_PROP_FPS) or 20.0  # fall back to 20 FPS if unknown
out = cv2.VideoWriter(OUTPUT_VIDEO_SSD_DET, fourcc, fps, (frame_width, frame_height))

while cap.isOpened():
    ret, frame = cap.read()
    if not ret:
        print("No frame...")
        break

    # Run detection, write the frame to the output and display it
    processed_frame = ssd_object_detection(frame)
    out.write(processed_frame)
    cv2.imshow('Frame', processed_frame)
    if cv2.waitKey(1) & 0xFF == ord('q'):
        break

# Release everything once finished
cap.release()
out.release()
cv2.destroyAllWindows()
```

(2) Object segmentation with SSD

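Similarly, I created an ssd_object_segmentation function for object segmentation. SSD itself predicts only bounding boxes, so the function approximates a mask inside each confident box. It converts the cropped region to grayscale, applies Otsu thresholding, finds the outer contours and fills them on the frame, then adds a label above the box.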
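The cv2.THRESH_OTSU flag tells cv2.threshold to pick the threshold itself using Otsu's method. Otsu's method chooses the gray level that maximizes the between-class variance of the two resulting pixel groups. A hypothetical pure-Python version, for illustration only, follows. With THRESH_BINARY_INV, pixels at or below the chosen level become the 255 foreground, so the SSD code treats the darker part of each box as the object. This only works well when the object is darker than its background.

```python
from typing import Sequence


def otsu_threshold(gray_values: Sequence[int]) -> int:
    """Return t in [0, 255] maximising the between-class variance, where class 0 is
    the values <= t. Returns 0 when no split exists (e.g. all values are equal)."""
    hist = [0] * 256
    for v in gray_values:
        hist[v] += 1
    total = len(gray_values)
    sum_all = sum(i * h for i, h in enumerate(hist))

    best_t, best_var = 0, -1.0
    count0, sum0 = 0, 0
    for t in range(256):
        count0 += hist[t]
        sum0 += t * hist[t]
        count1 = total - count0
        if count0 == 0 or count1 == 0:
            continue  # one class is empty, so this t does not split the pixels
        mu0 = sum0 / count0
        mu1 = (sum_all - sum0) / count1
        # Proportional to w0 * w1 * (mu0 - mu1)**2 (weights scaled by total**2)
        between = count0 * count1 * (mu0 - mu1) ** 2
        if between > best_var:
            best_t, best_var = t, between
    return best_t
```

For a crop where a dark object (gray level 20 to 30) sits on a bright background (around 220), the threshold falls between the two groups. The object pixels then end up in the filled mask.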

```python
import cv2
import torch
import torchvision.transforms as T
from torchvision.models.detection import ssd300_vgg16, SSD300_VGG16_Weights

# VIDEO and OUTPUT_VIDEO_SSD_SEG are file paths defined elsewhere in the notebook
FONT = cv2.FONT_HERSHEY_SIMPLEX
WEIGHTS = SSD300_VGG16_Weights.DEFAULT
COCO_NAMES = WEIGHTS.meta["categories"]  # index 0 is "__background__", so labels index it directly

# Load the SSD300 (VGG16 backbone) model pre-trained on COCO
model = ssd300_vgg16(weights=WEIGHTS)
model.eval()


def ssd_object_segmentation(frame, threshold=0.5):
    # OpenCV frames are BGR; the model expects an RGB tensor in [0, 1] with a batch dimension
    rgb = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
    frame_tensor = T.ToTensor()(rgb).unsqueeze(0)

    with torch.no_grad():
        prediction = model(frame_tensor)[0]

    labels = prediction['labels'].cpu().numpy()
    scores = prediction['scores'].cpu().numpy()
    boxes = prediction['boxes'].cpu().numpy()

    height, width = frame.shape[:2]
    for box, label_id, score in zip(boxes, labels, scores):
        if score <= threshold:
            continue
        # Clip the box to the frame so the crop is never empty or out of bounds
        xmin, ymin, xmax, ymax = map(int, box)
        xmin, ymin = max(xmin, 0), max(ymin, 0)
        xmax, ymax = min(xmax, width), min(ymax, height)
        if xmax <= xmin or ymax <= ymin:
            continue

        # Extract the detected object; the slice is a view, so drawing on it edits the frame
        object_segment = frame[ymin:ymax, xmin:xmax]
        # Convert to grayscale and let Otsu choose the threshold (the 127 is then ignored)
        gray = cv2.cvtColor(object_segment, cv2.COLOR_BGR2GRAY)
        _, mask = cv2.threshold(gray, 127, 255, cv2.THRESH_BINARY_INV | cv2.THRESH_OTSU)
        # Find the outer contours and fill them in green on the original frame
        contours, _ = cv2.findContours(mask, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)
        cv2.drawContours(object_segment, contours, -1, (0, 255, 0), thickness=cv2.FILLED)

        # Put label above the box
        label = f"{COCO_NAMES[int(label_id)]}: {score:.2f}"
        cv2.putText(frame, label, (xmin, max(ymin - 10, 10)), FONT, 0.5, (255, 0, 0), 2, cv2.LINE_AA)
    return frame


# Capture video and set up the writer
cap = cv2.VideoCapture(VIDEO)
fourcc = cv2.VideoWriter_fourcc(*'mp4v')
frame_width = int(cap.get(cv2.CAP_PROP_FRAME_WIDTH))
frame_height = int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT))
fps = cap.get(cv2.CAP_PROP_FPS) or 20.0  # fall back to 20 FPS if unknown
out = cv2.VideoWriter(OUTPUT_VIDEO_SSD_SEG, fourcc, fps, (frame_width, frame_height))

while cap.isOpened():
    ret, frame = cap.read()
    if not ret:
        print("No frame...")
        break

    # Overlay segmentation masks, write the frame to the output and display it
    processed_frame = ssd_object_segmentation(frame)
    out.write(processed_frame)
    cv2.imshow('Frame', processed_frame)
    if cv2.waitKey(1) & 0xFF == ord('q'):
        break

# Release everything once finished
cap.release()
out.release()
cv2.destroyAllWindows()
```

4. Evaluation

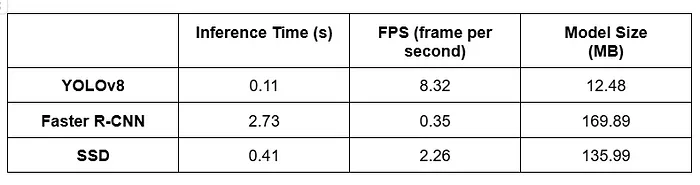

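In this section I evaluate and compare three popular object detection models: YOLO (You Only Look Once), Faster R-CNN (Region-based Convolutional Neural Network) and SSD (Single Shot MultiBox Detector). All experiments ran on a CPU rather than a CUDA GPU. The evaluation covers:

- Frames per second (FPS): the number of frames each model processes per second.

- Inference time: the time each model needs to detect the objects in a frame.

- Model size: the disk space each model occupies.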
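The three metrics can be measured with a small timing harness. The sketch below is an assumption about how such numbers could be collected, not the notebook's own code. The hypothetical benchmark function times any per-frame inference callable with time.perf_counter and derives FPS as the reciprocal of the mean inference time. It reads the weight-file size with os.path.getsize.

```python
import os
import time
from typing import Callable, Dict, Iterable


def benchmark(infer: Callable[[object], object], frames: Iterable[object],
              weights_path: str) -> Dict[str, float]:
    """Mean per-frame inference time (ms), the FPS it implies, and weight-file size (MiB)."""
    times = []
    for frame in frames:
        start = time.perf_counter()
        infer(frame)  # only the model call is timed
        times.append(time.perf_counter() - start)
    if not times:
        raise ValueError("no frames to benchmark")
    mean_s = sum(times) / len(times)
    return {
        "inference_ms": mean_s * 1000.0,
        "fps": 1.0 / mean_s,
        "model_size_mb": os.path.getsize(weights_path) / (1024 * 1024),
    }
```

Because only the model call is timed, this reports model throughput. End-to-end FPS, which also includes decoding, drawing and writing frames, would be lower.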

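(1) Discussion of performance differences

From the evaluation results I observe the following:

- Speed: YOLO outperforms Faster R-CNN and SSD in both FPS and inference time, which makes it well suited to real-time applications.

- Accuracy: Faster R-CNN tends to produce more accurate detections than YOLO and SSD.

- Model size: YOLO has the smallest model, which is an advantage on devices with limited storage.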

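(2) Best-performing method

Based on the evaluation results and the qualitative analysis, YOLOv8 is the best SoA method for object detection and segmentation in video sequences. Its superior speed, compact model size and strong performance make it the ideal choice for real-world applications where both accuracy and efficiency matter.

Full project code and videos: https://github.com/fatimagulomova/iu-projects/blob/main/DLBAIPCV01/MainProject.ipynb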